Motion Capture - General

Faceit includes interfaces for recording and importing animation data from various motion capture applications. All Faceit motion capture operators are streamlined, so it's easy to switch between the different capturing workflows and combine motions from different sources.

Faceit comes with a range of interfaces and utilities that let you import recorded animations or record new animations live in the Blender viewport. Faceit supports the popular and accessible ARKit, Hallway Tile and Audio2Face platforms for high-quality performance capture. Animation recorded with any of these apps can be retargeted to FACS-based shape keys, such as the ARKit or Audio2Face expression sets. Faceit does not offer any interfaces for bone-based motion capture. Apps for PCs, Macs and iOS devices are supported through easy-to-use interfaces.

Quickly receive and record animation data from various sources in real-time.

Additional costs may apply. See below for the hardware and software requirements of each platform.

Apple ARKit

ARKit is Apple's augmented reality (AR) development platform for iOS devices. ARKit Face Tracking is a powerful and easy-to-use tool available on all iOS devices with a TrueDepth camera (see Hardware). It captures 52 micro expressions of an actor's face in real time. Any 3D character equipped with a set of shape keys resembling these 52 expressions can be animated with ARKit. Besides creating the 52 required shape keys, Faceit also provides a collection of tools that ease the performance capture process right inside Blender.
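As a quick sanity check before capturing, you can compare a character's shape key names against the 52 ARKit names. The sketch below is plain Python and is not part of Faceit; the list follows Apple's ARKit blendshape location names, and the case-insensitive comparison is an assumption about how strictly names need to match in your pipeline.

```python
# The 52 ARKit Face Tracking blendshape names, grouped by facial region.
ARKIT_BLENDSHAPES = (
    # Eyes (left)
    "eyeBlinkLeft", "eyeLookDownLeft", "eyeLookInLeft", "eyeLookOutLeft",
    "eyeLookUpLeft", "eyeSquintLeft", "eyeWideLeft",
    # Eyes (right)
    "eyeBlinkRight", "eyeLookDownRight", "eyeLookInRight", "eyeLookOutRight",
    "eyeLookUpRight", "eyeSquintRight", "eyeWideRight",
    # Jaw
    "jawForward", "jawLeft", "jawRight", "jawOpen",
    # Mouth
    "mouthClose", "mouthFunnel", "mouthPucker", "mouthLeft", "mouthRight",
    "mouthSmileLeft", "mouthSmileRight", "mouthFrownLeft", "mouthFrownRight",
    "mouthDimpleLeft", "mouthDimpleRight", "mouthStretchLeft", "mouthStretchRight",
    "mouthRollLower", "mouthRollUpper", "mouthShrugLower", "mouthShrugUpper",
    "mouthPressLeft", "mouthPressRight", "mouthLowerDownLeft", "mouthLowerDownRight",
    "mouthUpperUpLeft", "mouthUpperUpRight",
    # Brows
    "browDownLeft", "browDownRight", "browInnerUp",
    "browOuterUpLeft", "browOuterUpRight",
    # Cheeks, nose and tongue
    "cheekPuff", "cheekSquintLeft", "cheekSquintRight",
    "noseSneerLeft", "noseSneerRight", "tongueOut",
)


def missing_arkit_shape_keys(shape_key_names):
    """Return the ARKit names with no matching shape key (case-insensitive)."""
    present = {name.lower() for name in shape_key_names}
    return [name for name in ARKIT_BLENDSHAPES if name.lower() not in present]
```

Inside Blender, the input could come from `[kb.name for kb in obj.data.shape_keys.key_blocks]`, where `obj` is your character mesh. An empty result means every ARKit expression has a corresponding shape key.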

Hardware

All ARKit applications listed below can only be used on iOS devices with a TrueDepth camera, which is available on the following devices:

- iPhone X, XR, XS, XS Max, 11, 11 Pro, 11 Pro Max, iPhone 12, iPhone 13...

- iPad Pro 3rd, 4th, 5th generation...

Software

This section gives a quick overview of the iOS apps that use ARKit Face Tracking. All of these apps rely on the same capture framework built into ARKit, so there shouldn't be any difference in the captured data. Some apps offer additional features, such as live streaming animation data, recording and exporting data, or amplifying individual expressions to make them more or less expressive.
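In its simplest form, amplifying an expression means applying a per-expression multiplier to the captured weight. The sketch below is a generic illustration, not the method of any particular app: it assumes weights in the 0 to 1 range and clamps the scaled result back into that range. The multiplier values in the usage note are hypothetical, and individual apps may implement amplification differently.

```python
def amplify(weights, multipliers, default=1.0):
    """Scale each expression weight by its multiplier and clamp to [0, 1].

    weights:     dict mapping expression name -> captured weight (0..1)
    multipliers: dict mapping expression name -> amplification factor;
                 expressions without an entry use `default`.
    """
    return {
        name: min(1.0, max(0.0, value * multipliers.get(name, default)))
        for name, value in weights.items()
    }
```

For example, `amplify({"mouthSmileLeft": 0.8, "browInnerUp": 0.5}, {"mouthSmileLeft": 2.0, "browInnerUp": 0.5})` exaggerates the smile (clamped to 1.0) and halves the brow raise to 0.25. Clamping keeps the values inside the range a shape key slider expects by default.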

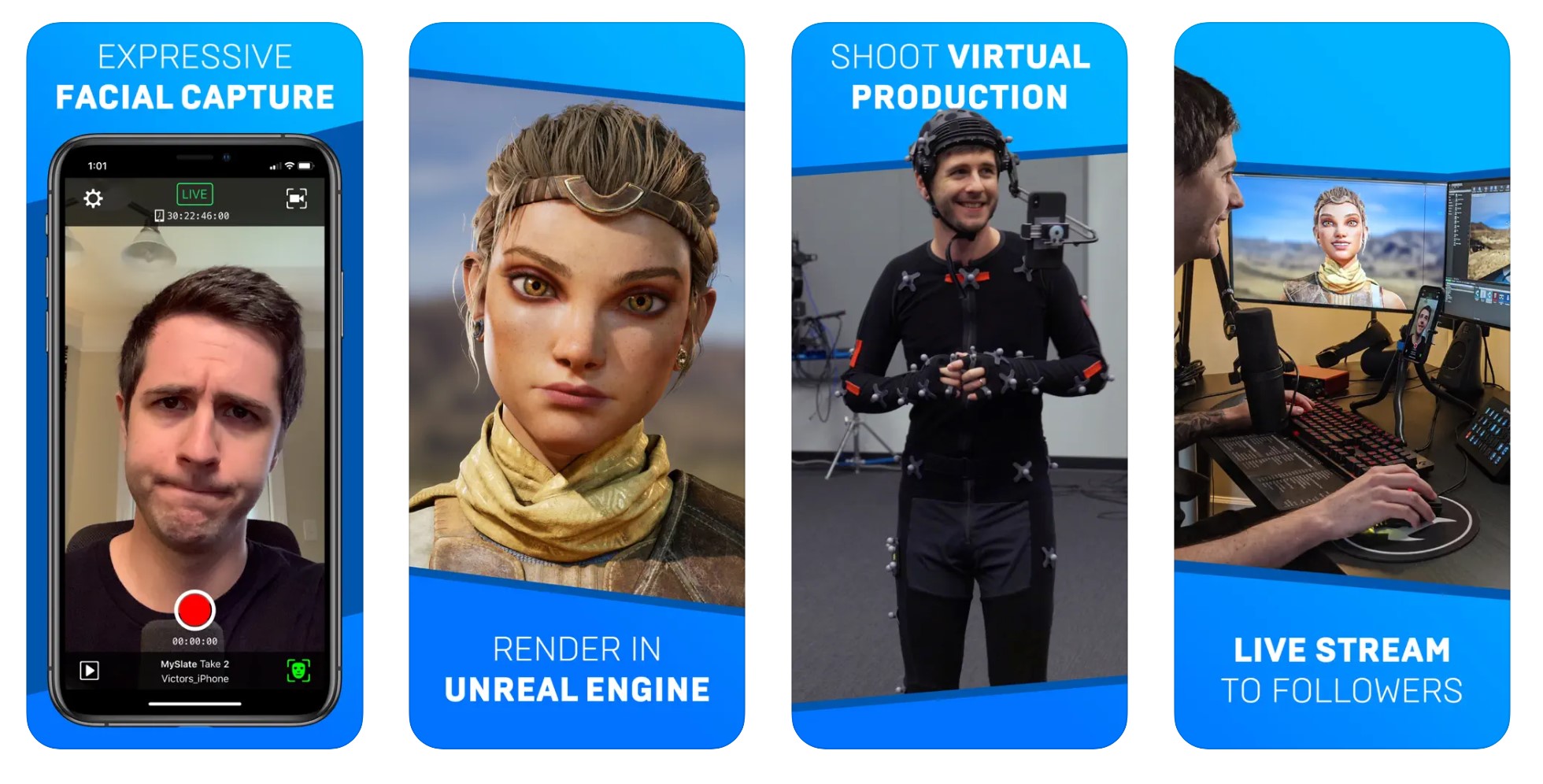

Live Link Face

Whether or not you use the Unreal Engine, the Live Link Face app by Epic Games is a great way to quickly record or stream ARKit data to various applications, including Faceit.

Get the app here

Find the documentation here

Import to Faceit

Face Cap App

Stream animation data live via OSC or record your performance and export the result to FBX or TXT.

Get the app here

Find the documentation here

Import to Faceit

- Import the TXT file format.

- Record live.

- Retarget motions from FBX.

In-App Purchases

The Face Cap app itself is free to use, but live streaming is limited to 5 seconds. You can unlock unlimited streaming via an in-app purchase ($34.99; the price may vary by region).

iFacialMocap

iFacialMocap is another app that lets you conveniently capture ARKit data on iOS devices. You can record animations in the app, or stream the data to Faceit and record it directly in Blender.

Check out the website for more information and download links.

Import to Faceit

In-App Purchases

Some features are only available with an in-app purchase.

Other ARKit Apps

There is a range of other iOS apps that can be used for recording ARKit Face Tracking data. The following list is not exhaustive, but gives an overview of the available apps that offer interfaces to Blender (not within Faceit, but with stand-alone add-ons or other methods).

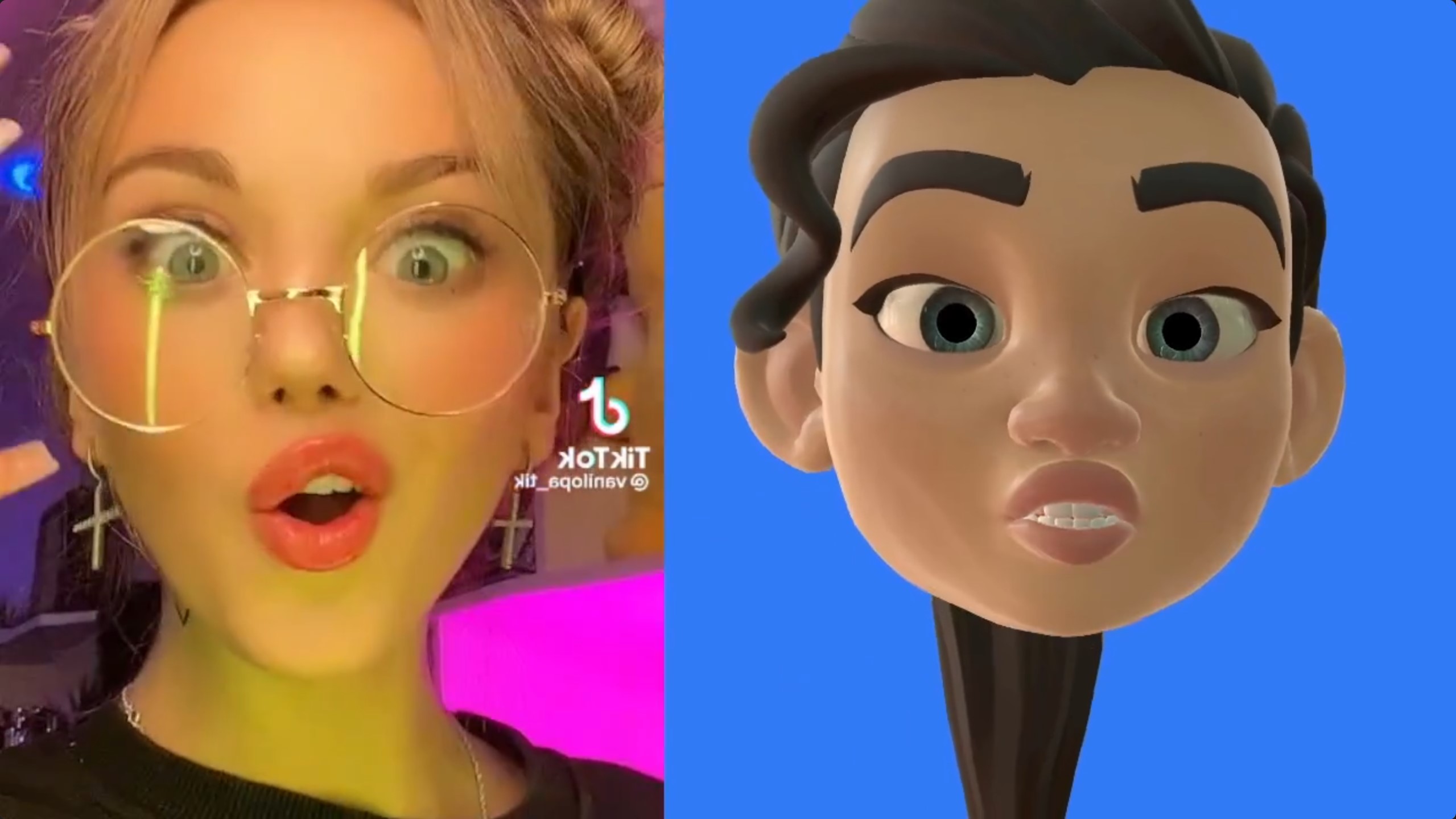

Facemotion3D

Facemotion3D captures facial expressions in an iOS app and streams them in real time to 3DCG software on your PC. Recorded animation data can be exported to FBX, and VRM files can be imported into the iOS app.

Get the app here.

Find more information here.

Get the Blender add-on here.

In-App Purchases

Some features are only available with an in-app purchase.

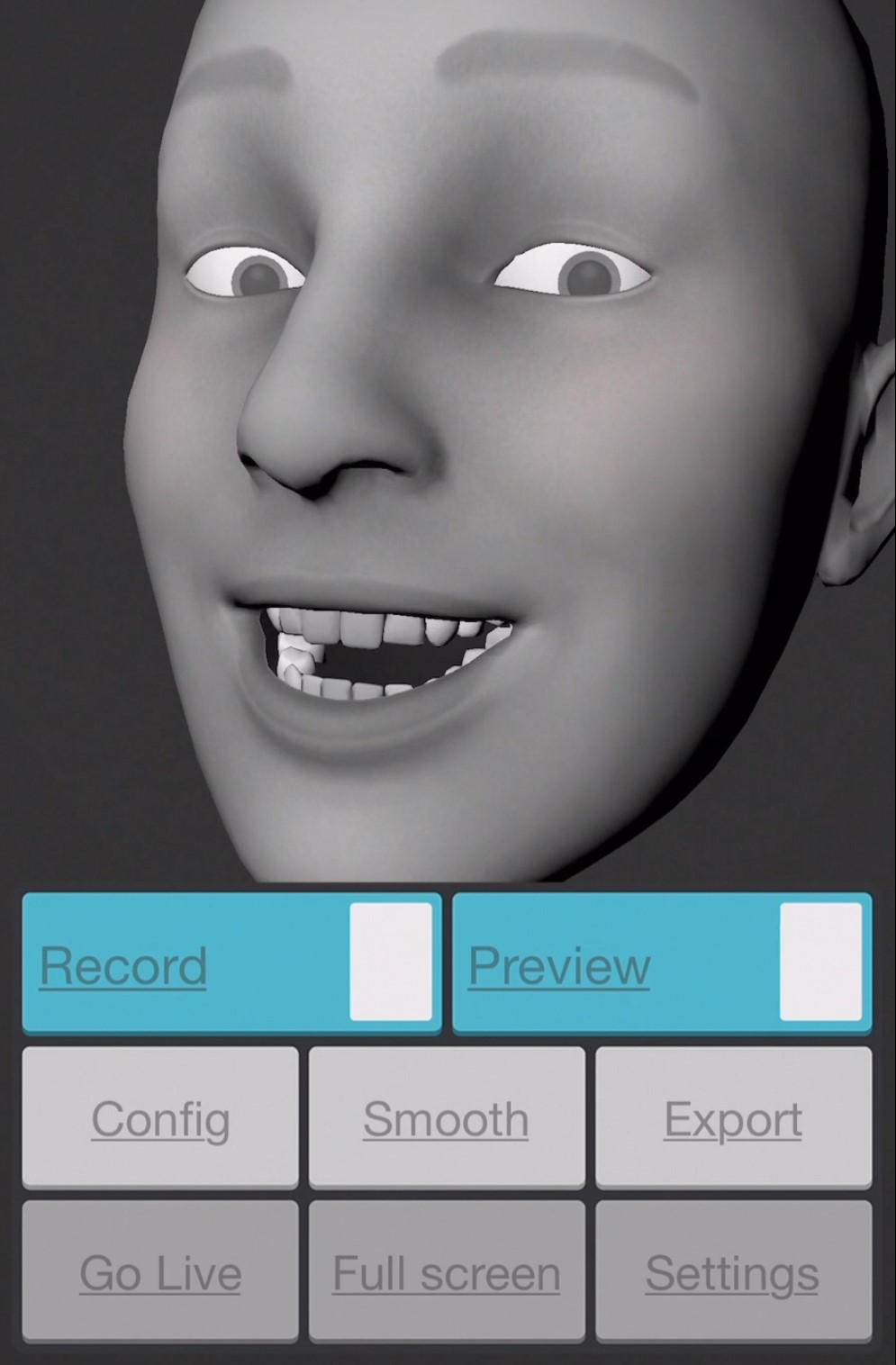

BMC

The BMC (Blender Motion Capture) app records facial motion and sends it to your computer via email. An add-on is provided to load the motion into your Blender scene.

Hallway Tile

Hallway Tile is a desktop app that captures the 52 ARKit expressions in high quality using a regular webcam. Faceit provides a direct interface for live capturing and recording the facial expressions in the Blender viewport.

Hallway Tile runs on Windows, Mac and Linux computers. All you need is a regular webcam, although the quality of the captured data depends on the webcam's resolution and frame rate.

Head to Hallway right now and start capturing your facial expressions.

Import to Faceit

- Record live via OSC.
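Hallway Tile and Face Cap stream their data over OSC (Open Sound Control), a small binary protocol usually sent over UDP. Faceit handles the connection for you; the sketch below only illustrates what an OSC 1.0 message looks like on the wire, using Python's standard library. The address patterns and argument layouts each app actually sends are app-specific and are not covered here; `/jawOpen` in the usage note is a hypothetical address. OSC bundles (packets starting with `#bundle`) are not handled.

```python
import socket
import struct


def _pad4(n):
    """Round n up to the next multiple of 4 (OSC aligns data to 32 bits)."""
    return (n + 3) & ~3


def _read_string(data, offset):
    """Read a null-terminated, 4-byte-padded OSC-string; return (text, offset)."""
    end = data.index(b"\x00", offset)
    return data[offset:end].decode("ascii"), _pad4(end + 1)


def _write_string(text):
    """Encode text as a null-terminated OSC-string padded to 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (_pad4(len(raw)) - len(raw))


def encode_message(address, *args):
    """Encode an OSC 1.0 message with int32, float32 and string arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _write_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _write_string(address) + _write_string(tags) + payload


def decode_message(data):
    """Decode an OSC 1.0 message into (address, [arguments])."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
    return address, args


def listen(port, handler, host="0.0.0.0"):
    """Receive OSC messages over UDP forever, passing each to handler(address, args)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            packet, _sender = sock.recvfrom(4096)
            handler(*decode_message(packet))
```

For example, `decode_message(encode_message("/jawOpen", 0.5))` returns `("/jawOpen", [0.5])`. Binding to `0.0.0.0` lets the socket receive packets from other devices on the network, such as a phone; the port is whatever you configure in the sending app.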

Face Landmark Link

Face Landmark Link is a community-built, free and open-source application that records ARKit data from video files or live from a webcam feed. It uses Google's MediaPipe to extract the animation data.

ARKit data from any 2D video source, recorded or in real time.

Import to Faceit

The Face Landmark Link app can output CSV files compatible with the Live Link Face importer and can also stream Live Link Face data to Faceit in real time. A tutorial can be found here.
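To get a feel for what such a CSV file contains, the sketch below reads it into one dictionary of blendshape weights per frame. It assumes a layout with a header row, a `Timecode` and a `BlendShapeCount` column, and one numeric column per blendshape; these column names are an assumption, so check them against a file you actually exported. Faceit's Live Link Face importer does this parsing for you.

```python
import csv
import io

# Columns assumed to hold metadata rather than blendshape weights.
METADATA_COLUMNS = {"Timecode", "BlendShapeCount"}


def read_blendshape_csv(text):
    """Parse CSV text into a list of per-frame dicts: blendshape name -> weight."""
    frames = []
    for row in csv.DictReader(io.StringIO(text)):
        frames.append({
            name: float(value)
            for name, value in row.items()
            # Skip metadata, surplus unnamed fields (key None) and empty cells.
            if name is not None
            and name not in METADATA_COLUMNS
            and value not in (None, "")
        })
    return frames
```

To read a file from disk, pass the result of `open(path, newline="").read()`. Each list entry corresponds to one row, so the list index can serve as a frame offset when keyframing.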

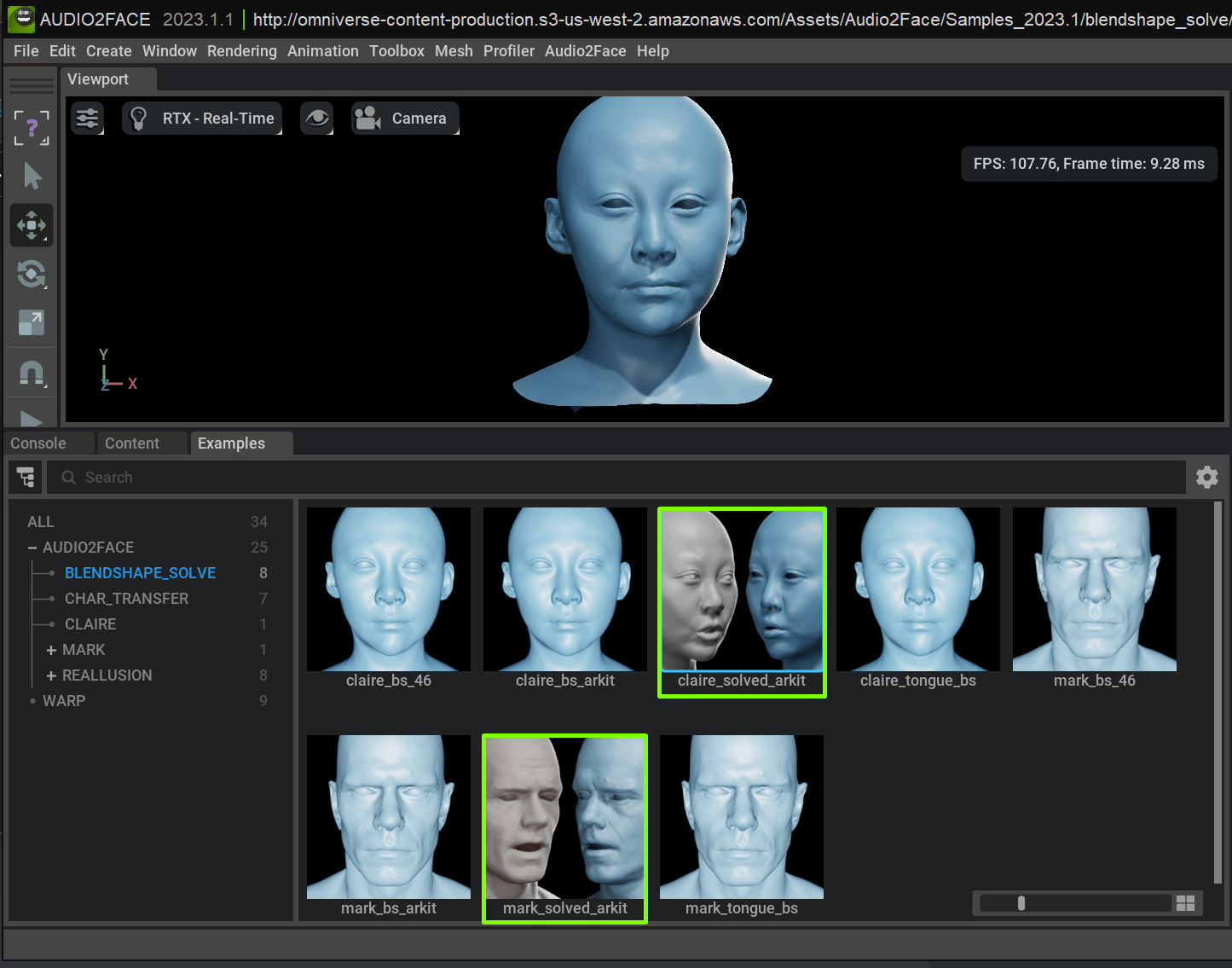

Nvidia Audio2Face

Nvidia's Audio2Face is a combination of AI-based technologies that generates facial motion and lip sync entirely from an audio source. It can be used at runtime or to generate facial animation for more traditional content creation pipelines. The resulting blendshape weights can be exported to .json files, which can in turn be imported into Blender via Faceit. Audio2Face is part of the Nvidia Omniverse platform.
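The exported .json file is essentially a matrix of weights: one row per exported frame and one column per blendshape. The sketch below converts such a file into per-blendshape keyframe lists and rescales frame indices from the export frame rate to the scene frame rate. It assumes the file stores the frame rate under `exportFps`, the blendshape names under `facsNames` and the weights under `weightMat`; verify these keys against your own export. It is an illustration only, not Faceit's importer.

```python
import json


def a2f_keyframes(text, scene_fps=24.0, start_frame=1):
    """Convert A2F-style blendshape JSON into {name: [(frame, weight), ...]}."""
    data = json.loads(text)
    names = data["facsNames"]                 # assumed key: blendshape names
    source_fps = float(data["exportFps"])     # assumed key: export frame rate
    curves = {name: [] for name in names}
    for index, weights in enumerate(data["weightMat"]):  # assumed key: rows = frames
        # Map the exported frame index to a (possibly fractional) scene frame.
        frame = start_frame + index * scene_fps / source_fps
        for name, weight in zip(names, weights):
            curves[name].append((frame, float(weight)))
    return curves
```

Inside Blender, each `(frame, weight)` pair could be applied by setting a shape key's `value` and calling `key_block.keyframe_insert("value", frame=frame)`. Fractional frames occur whenever the export rate is not a whole multiple of the scene rate.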

Import to Faceit

Tip

Recent Audio2Face versions also include ARKit solvers, so you can import A2F data directly to the Faceit control rig.

Hardware

RTX only

At the time of writing, Audio2Face requires an Nvidia RTX video card and a Windows operating system. See this page for details on hardware requirements.

General Retargeting (FBX)

For convenience, Faceit also provides a set of tools that let you retarget any shape key animation to other characters. Read more here.

Import to Faceit

If you want to import blendshape animation data from other sources, it is often easiest to import an FBX file containing the animation. Faceit's Shape Key Retargeter lets you quickly rename the data paths in the action itself to match your character model.
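Renaming data paths works because shape key F-Curves in Blender address their targets by name, with paths such as `key_blocks["jawOpen"].value`. The sketch below shows that idea on plain strings; it is not the Shape Key Retargeter's implementation, and the name map in the usage note is hypothetical. Shape key names that contain double quotes are not handled.

```python
import re

# Shape key F-Curve data paths look like: key_blocks["jawOpen"].value
_KEY_BLOCK_PATH = re.compile(r'^key_blocks\["(?P<name>[^"]+)"\](?P<rest>.*)$')


def retarget_data_path(data_path, name_map):
    """Rename the shape key referenced by a data path using name_map.

    Paths that do not point at a shape key, or whose shape key name is
    not in name_map, are returned unchanged.
    """
    match = _KEY_BLOCK_PATH.match(data_path)
    if match is None or match.group("name") not in name_map:
        return data_path
    new_name = name_map[match.group("name")]
    return 'key_blocks["' + new_name + '"]' + match.group("rest")
```

With a hypothetical map such as `{"JawOpen": "jawOpen"}`, the path `key_blocks["JawOpen"].value` becomes `key_blocks["jawOpen"].value`. In Blender versions that expose `Action.fcurves`, the same function could be applied with `for fc in action.fcurves: fc.data_path = retarget_data_path(fc.data_path, name_map)`.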